What is Correlation Analysis? Definition, Process, Examples

Appinio Research · 07.11.2023 · 30min read


Are you curious about how different variables interact and influence each other? Correlation analysis is the key to unlocking these relationships in your data. In this guide, we'll dive deep into correlation analysis, exploring its definition, methods, applications, and practical examples.

Whether you're a data scientist, researcher, or business professional, understanding correlation analysis will empower you to make informed decisions, manage risks, and uncover valuable insights from your data. Let's embark on this exploration of correlation analysis and discover its significance in various domains.

What is Correlation Analysis?

Correlation analysis is a statistical technique used to measure and evaluate the strength and direction of the relationship between two or more variables. It helps identify whether changes in one variable are associated with changes in another and quantifies the degree of this association.

Purpose of Correlation Analysis

The primary purpose of correlation analysis is to:

- Discover Relationships: Correlation analysis helps researchers and analysts identify patterns and relationships between variables in their data. It answers questions like, "Do these variables move together or in opposite directions?"

- Quantify Relationships: Correlation analysis quantifies the strength and direction of associations between variables, providing a numerical measure that allows for comparisons and objective assessments.

- Predictive Insights: Correlation analysis can be used for predictive purposes. If two variables show a strong correlation, changes in one variable can be used to predict changes in the other, which is valuable for forecasting and decision-making.

- Data Reduction: In multivariate analysis, correlation analysis can help identify redundant variables. Highly correlated variables may carry similar information, allowing analysts to simplify their models and reduce dimensionality.

- Diagnostics: In fields like healthcare and finance, correlation analysis is used for diagnostic purposes. For instance, it can reveal correlations between symptoms and diseases or between financial indicators and market trends.

Importance of Correlation Analysis

- Decision-Making: Correlation analysis provides crucial insights for informed decision-making. For example, in finance, understanding the correlation between assets helps in portfolio diversification, risk management, and asset allocation decisions. In business, it aids in assessing the effectiveness of marketing strategies and identifying factors influencing sales.

- Risk Assessment: Correlation analysis is essential for risk assessment and management. In financial risk analysis, it helps identify how assets within a portfolio move relative to each other. Highly positively correlated assets can increase risk, while negatively correlated assets can provide diversification benefits.

- Scientific Research: In scientific research, correlation analysis is a fundamental tool for understanding relationships between variables. For example, healthcare research can uncover correlations between patient characteristics and health outcomes, leading to improved treatments and interventions.

- Quality Control: In manufacturing and quality control, correlation analysis can be used to identify factors that affect product quality. For instance, it helps determine whether changes in manufacturing processes correlate with variations in product specifications.

- Predictive Modeling: Correlation analysis is a precursor to building predictive models. Variables with strong correlations may be used as predictors in regression models to forecast outcomes, such as predicting customer churn based on their usage patterns and demographics.

- Identifying Confounding Factors: In epidemiology and social sciences, correlation analysis is used to identify confounding factors. When studying the relationship between two variables, a third variable may confound the association. Checking how a candidate third variable correlates with each of the two variables, and computing partial correlations that control for it, helps researchers identify and account for these confounders.

In summary, correlation analysis is a versatile and indispensable statistical tool with broad applications in various fields. It helps reveal relationships, assess risks, make informed decisions, and advance scientific understanding, making it a valuable asset in data analysis and research.

Types of Correlation

Correlation analysis involves examining the relationship between variables. There are several methods to measure correlation, each suited for different types of data and situations. In this section, we'll explore three main types of correlation:

Pearson Correlation Coefficient

The Pearson Correlation Coefficient, often referred to as Pearson's "r," is the most widely used method to measure linear relationships between continuous variables. It quantifies the strength and direction of a linear association between two variables.

Spearman Rank Correlation

Spearman Rank Correlation, also known as Spearman's "ρ" (rho), is a non-parametric method used to measure the strength and direction of the association between two variables. It is particularly beneficial when dealing with monotonic relationships that are not linear, or with ordinal data.

Kendall Tau Correlation

Kendall Tau Correlation, often denoted as "τ" (tau), is another non-parametric method for assessing the association between two variables. It is advantageous when dealing with small sample sizes or data with ties (values that occur more than once).

How to Prepare Data for Correlation Analysis?

Before diving into correlation analysis, you must ensure your data is well-prepared to yield meaningful results. Proper data preparation is crucial for accurate and reliable outcomes. Let's explore the essential steps involved in preparing your data.

1. Data Collection

- Identify Relevant Variables: Determine which variables you want to analyze for correlation. These variables should be logically connected or hypothesized to have an association.

- Data Sources: Collect data from reliable sources, ensuring that it is representative of the population or phenomenon you are studying.

- Data Quality: Check for data quality issues such as missing values, outliers, or errors during the data collection process.

2. Data Cleaning

- Handling Missing Data: Decide on an appropriate strategy for dealing with missing values. You can either impute missing data or exclude cases with missing values, depending on the nature of your analysis and the extent of missing data.

- Duplicate Data: Detect and remove duplicate entries to avoid skewing your analysis.

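- Data Transformation: If necessary, transform variables before the analysis. Note that correlation coefficients are unaffected by linear rescaling, so normalization or standardization does not change r; non-linear transformations such as taking logarithms can, however, make a skewed relationship closer to linear for Pearson's r.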

3. Handling Missing Values

- Types of Missing Data: Understand the types of missing data, such as missing completely at random (MCAR), missing at random (MAR), or missing not at random (MNAR).

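- Imputation Methods: Choose appropriate imputation methods, such as mean imputation, median imputation, or regression imputation, based on the missing data pattern and the nature of your variables. Be aware that filling gaps with a single mean or median value reduces a variable's variability and tends to weaken its observed correlations with other variables.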

4. Outlier Detection and Treatment

- Identifying Outliers: Utilize statistical methods or visualizations (e.g., box plots, scatter plots) to identify outliers in your data.

- Treatment Options: Decide whether to remove outliers, transform them, or leave them in your dataset based on the context and objectives of your analysis.

Effective data preparation sets the stage for robust correlation analysis. By following these steps, you ensure that your data is clean, complete, and ready for meaningful insights. In the subsequent sections of this guide, we will delve deeper into the calculations, interpretations, and practical applications of correlation analysis.
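The cleaning steps above can be sketched in pandas. This is a minimal illustration on made-up numbers, assuming listwise deletion for missing values and Tukey's 1.5 × IQR rule for flagging outliers; both are common choices rather than the only correct ones.

```python
import numpy as np
import pandas as pd

# Made-up data: one duplicated row, one missing x value, one extreme y value
df = pd.DataFrame({
    "x": [1, 2, 2, 3, 4, 5, np.nan, 6],
    "y": [2, 4, 4, 6, 8, 10, 12, 60],
})

# Remove exact duplicate rows, then drop any row with a missing value (listwise deletion)
clean = df.drop_duplicates().dropna()

# Flag outliers in y with the 1.5 * IQR rule (Tukey's fences)
q1, q3 = clean["y"].quantile([0.25, 0.75])
iqr = q3 - q1
is_outlier = (clean["y"] < q1 - 1.5 * iqr) | (clean["y"] > q3 + 1.5 * iqr)

# Compare Pearson's r with and without the flagged rows
r_all = clean["x"].corr(clean["y"])
r_trimmed = clean.loc[~is_outlier, "x"].corr(clean.loc[~is_outlier, "y"])

print(f"rows kept: {len(clean)}, outliers flagged: {int(is_outlier.sum())}")
print(f"r with outlier: {r_all:.3f}, r without: {r_trimmed:.3f}")
```

Whether to drop the flagged point remains a judgment call; printing r with and without it shows how much one value can move the result. Note also that pandas' `DataFrame.corr()` excludes missing values pairwise by default, so skipping the explicit `dropna()` could mean different pairs of columns are computed on different rows.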

Pearson Correlation Coefficient

The Pearson Correlation Coefficient, often referred to as Pearson's "r," is a widely used statistical measure for quantifying the strength and direction of a linear relationship between two continuous variables. Understanding how to calculate, interpret, and recognize the strength and direction of this correlation is essential.

Calculation

The formula for calculating the Pearson correlation coefficient is as follows:

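r = Σ((X - X̄)(Y - Ȳ)) / √(Σ(X - X̄)² * Σ(Y - Ȳ)²)

Where:

- X and Y are the paired values of the two variables being analyzed.

- X̄ and Ȳ are the means (averages) of X and Y.

- Each sum (Σ) runs over all n data points.

To calculate "r," you take the sum of the products of each data point's deviations from the two means and divide it by the square root of the product of the two sums of squared deviations. This scaling keeps r between -1 and +1. Equivalently, r = cov(X, Y) / (s_X * s_Y), the sample covariance divided by the product of the two sample standard deviations; the (n - 1) factors in these three quantities cancel, which is why n does not appear in the formula itself.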
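To check the formula, here is a minimal from-scratch implementation in Python, compared against `numpy.corrcoef()`. The function name `pearson_r` and the study-hours data are hypothetical, chosen only for illustration.

```python
import numpy as np

def pearson_r(x, y):
    """Pearson's r: sum of co-deviations divided by the root of both squared-deviation sums."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    dx = x - x.mean()   # deviations of X from its mean
    dy = y - y.mean()   # deviations of Y from its mean
    return np.sum(dx * dy) / np.sqrt(np.sum(dx ** 2) * np.sum(dy ** 2))

# Hypothetical data: weekly study hours and exam scores for six students
hours = [1, 2, 3, 4, 5, 6]
scores = [52, 55, 61, 60, 68, 74]

r = pearson_r(hours, scores)
print(f"manual r: {r:.4f}")
print(f"numpy r:  {np.corrcoef(hours, scores)[0, 1]:.4f}")
```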

Interpretation

Interpreting the Pearson correlation coefficient is crucial for understanding the nature of the relationship between two variables:

- Positive correlation (r > 0): When "r" is positive, it indicates a positive linear relationship. This means that as one variable increases, the other tends to increase as well.

- Negative correlation (r < 0): A negative "r" value suggests a negative linear relationship, implying that as one variable increases, the other tends to decrease.

- No Correlation (r ≈ 0): If "r" is close to 0, there is little to no linear relationship between the variables. In this case, changes in one variable are not associated with consistent changes in the other.

Strength and Direction of Correlation

The magnitude of the Pearson correlation coefficient "r" indicates the strength of the correlation:

- Strong Correlation: When |r| is close to 1 (either positive or negative), it suggests a strong linear relationship. A value of 1 indicates a perfect positive linear relationship, while -1 indicates a perfect negative linear relationship.

- Weak Correlation: When |r| is closer to 0, it implies a weaker linear relationship. The closer "r" is to 0, the weaker the correlation.

The sign of "r" (+ or -) indicates the direction of the correlation:

- Positive Correlation: A positive "r" suggests that as one variable increases, the other tends to increase. The variables move in the same direction.

- Negative Correlation: A negative "r" suggests that as one variable increases, the other tends to decrease. The variables move in opposite directions.

Assumptions and Limitations

It's essential to be aware of the assumptions and limitations of the Pearson correlation coefficient:

- Linearity: Pearson correlation assumes that there is a linear relationship between the variables. If the relationship is not linear, Pearson's correlation may not accurately capture the association.

- Normal Distribution: The standard significance test and confidence intervals for r assume that the two variables are (approximately) bivariate normal. If this assumption is violated, p-values and confidence intervals may be less reliable.

- Outliers: Outliers can have a significant impact on the Pearson correlation coefficient. Extreme values may distort the correlation results.

- Independence: It assumes that the data points are independent of each other.

Understanding these assumptions and limitations is vital when interpreting the results of Pearson correlation analysis. In cases where these assumptions are not met, other correlation methods like Spearman or Kendall Tau may be more appropriate.
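The outlier limitation is easy to demonstrate. In this sketch (synthetic data, assuming NumPy and SciPy are installed), ten points lie on a perfect increasing line except for one extreme value:

```python
import numpy as np
from scipy import stats

# Synthetic data: y = x for x = 1..10, except that the last value is an extreme outlier
x = np.arange(1, 11)
y = x.astype(float)
y[-1] = -100.0

r_pearson, _ = stats.pearsonr(x, y)        # works on the raw values
rho_spearman, _ = stats.spearmanr(x, y)    # works only on the ranks
print(f"Pearson r:    {r_pearson:.3f}")
print(f"Spearman rho: {rho_spearman:.3f}")
```

Here Pearson's r comes out at about -0.45 while Spearman's rho stays at about +0.45: the single outlier reverses the sign of r, whereas the rank-based coefficient only registers that one point is out of order.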

Spearman Rank Correlation

Spearman Rank Correlation, also known as Spearman's "ρ" (rho), is a non-parametric method used to measure the strength and direction of the association between two variables. This method is valuable when the relationship is monotonic but not necessarily linear, or when dealing with ordinal data.

Calculation

To calculate Spearman Rank Correlation, you need to follow these steps:

- Rank the values of each variable separately, assigning rank 1 to the smallest value and rank n to the largest. Tied values receive the average of the ranks they would otherwise occupy.

- For each data point, calculate the difference d between its rank on the first variable and its rank on the second variable.

- Square the differences and sum them over all data points.

- Use the formula for Spearman's rho:

ρ = 1 - ((6 * Σd²) / (n(n² - 1)))

Where:

- ρ is the Spearman rank correlation coefficient.

- Σd² is the sum of squared differences in ranks.

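- n is the number of data points.

This shortcut formula is exact only when there are no tied ranks. When ties are present, Spearman's rho is computed as the Pearson correlation coefficient of the ranks, which is what statistical software typically does.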
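The rank-difference formula can be sketched in Python as follows. The helper `spearman_rho_no_ties` and the small dataset are hypothetical; `scipy.stats.rankdata()` handles the ranking, and `scipy.stats.spearmanr()` serves as a cross-check. For these values, two observations differ by one rank place, so Σd² = 2 and ρ = 1 - 12/120 = 0.9.

```python
from scipy import stats

def spearman_rho_no_ties(x, y):
    """Spearman's rho via 1 - 6 * sum(d^2) / (n(n^2 - 1)); exact only when there are no ties."""
    n = len(x)
    rank_x = stats.rankdata(x)   # rank 1 = smallest value (ties would get average ranks)
    rank_y = stats.rankdata(y)
    d_squared = sum((rx - ry) ** 2 for rx, ry in zip(rank_x, rank_y))
    return 1 - (6 * d_squared) / (n * (n ** 2 - 1))

# Hypothetical data with no tied values
x = [12, 7, 3, 18, 9]
y = [30, 21, 15, 40, 19]

rho = spearman_rho_no_ties(x, y)
rho_scipy, _ = stats.spearmanr(x, y)
print(f"formula: {rho:.3f}, scipy.stats.spearmanr: {rho_scipy:.3f}")
```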

When to Use Spearman Correlation?

Spearman Rank Correlation is particularly useful in the following scenarios:

- When the relationship between variables is monotonic (consistently increasing or consistently decreasing) but not necessarily linear, since Spearman does not assume linearity.

- When dealing with ordinal data, where values have a natural order but are not equidistant.

- When your data violates the assumptions of the Pearson correlation coefficient, such as normality and linearity.
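A quick way to see why a monotonic but curved relationship favors Spearman: in the synthetic example below (assuming NumPy and SciPy), y = eˣ rises strictly with x, so the ranks agree perfectly, but the points do not lie on a straight line.

```python
import numpy as np
from scipy import stats

# Synthetic data: y = e^x is strictly increasing (monotonic) but far from a straight line
x = np.arange(1, 11)
y = np.exp(x)

r, _ = stats.pearsonr(x, y)
rho, _ = stats.spearmanr(x, y)
print(f"Pearson r: {r:.3f}, Spearman rho: {rho:.3f}")
```

Spearman's rho is 1 here, while Pearson's r is noticeably lower (roughly 0.7), understating how tightly the two variables are related.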

Interpretation

Interpreting Spearman's rho is similar to interpreting Pearson correlation:

- A positive ρ indicates a positive monotonic relationship, meaning that as one variable increases, the other tends to increase.

- A negative ρ suggests a negative monotonic relationship, where as one variable increases, the other tends to decrease.

- A ρ close to 0 implies little to no monotonic association between the variables.

Spearman Rank Correlation is robust and versatile, making it a valuable tool for analyzing relationships in a variety of data types and scenarios.

Kendall Tau Correlation

The Kendall Tau Correlation, often denoted as "τ" (tau), is a non-parametric measure used to assess the strength and direction of association between two variables. Kendall Tau is particularly valuable when dealing with small sample sizes, monotonic but non-linear relationships, or data that violates the assumptions of the Pearson correlation coefficient.

Calculation

Calculating Kendall Tau Correlation involves counting concordant and discordant pairs of data points. Here's how it's done:

- For each pair of observations (Xi, Yi) and (Xj, Yj), determine whether the pair is concordant or discordant.

- Concordant pairs: If Xi < Xj and Yi < Yj or Xi > Xj and Yi > Yj.

- Discordant pairs: If Xi < Xj and Yi > Yj or Xi > Xj and Yi < Yj.

- Count the number of concordant pairs (C) and discordant pairs (D).

- Use the formula for Kendall's Tau:

τ = (C - D) / (0.5 * n * (n - 1))

Where:

- τ is the Kendall Tau correlation coefficient.

- C is the number of concordant pairs.

- D is the number of discordant pairs.

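- n is the number of data points.

Pairs that are tied on X or on Y count as neither concordant nor discordant. This version of the formula is known as tau-a; when the data contain ties, the tau-b variant adjusts the denominator for them, and it is the default in common implementations such as SciPy's kendalltau.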
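The pair-counting procedure translates directly into code. This sketch computes tau-a with a hypothetical helper `kendall_tau_a` and compares it with `scipy.stats.kendalltau()`; on data without ties, tau-a and SciPy's default tau-b coincide.

```python
from itertools import combinations
from scipy import stats

def kendall_tau_a(x, y):
    """Kendall's tau-a: (C - D) / (n(n - 1) / 2), visiting every pair of observations once."""
    concordant = discordant = 0
    for (xi, yi), (xj, yj) in combinations(zip(x, y), 2):
        s = (xi - xj) * (yi - yj)
        if s > 0:
            concordant += 1   # both variables are ordered the same way
        elif s < 0:
            discordant += 1   # the variables are ordered in opposite ways
        # s == 0 means a tie in x or y: the pair counts as neither
    n = len(x)
    return (concordant - discordant) / (0.5 * n * (n - 1))

# Hypothetical data with no tied values
x = [12, 7, 3, 18, 9]
y = [30, 21, 15, 40, 19]

tau = kendall_tau_a(x, y)
tau_scipy, _ = stats.kendalltau(x, y)
print(f"manual tau-a: {tau:.3f}, scipy.stats.kendalltau: {tau_scipy:.3f}")
```

For these five observations, 9 of the 10 pairs are concordant and 1 is discordant, giving τ = (9 - 1) / 10 = 0.8. The double loop takes O(n²) time, which is fine for small samples; library implementations typically use faster algorithms for large ones.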

Advantages of Kendall Tau

Kendall Tau Correlation offers several advantages, making it a robust choice in various scenarios:

- Robust to Outliers: Kendall Tau is less sensitive to outliers compared to Pearson correlation, making it suitable for data with extreme values.

- Small Sample Sizes: It performs well with small sample sizes, making it applicable even when you have limited data.

- Non-Parametric: Kendall Tau is non-parametric, meaning it doesn't assume specific data distributions, making it versatile for various data types.

- No Assumption of Linearity: Unlike Pearson correlation, Kendall Tau does not assume a linear relationship between variables, making it suitable for capturing monotonic associations that are not linear.

Interpretation

Interpreting Kendall Tau correlation follows a similar pattern to Pearson and Spearman correlation:

- Positive τ (τ > 0): Indicates a positive association between variables. As one variable increases, the other tends to increase.

- Negative τ (τ < 0): Suggests a negative association. As one variable increases, the other tends to decrease.

- τ Close to 0: Implies little to no association between the variables.

Kendall Tau is a valuable tool when you want to explore associations in your data without making strong assumptions about data distribution or linearity.

How to Interpret Correlation Results?

Once you've calculated correlation coefficients, the next step is interpreting the results. Understanding how to make sense of the correlation values and what they mean for your analysis is crucial.

Correlation Heatmaps

Correlation heatmaps are visual representations of correlation coefficients between multiple variables. They provide a quick and intuitive way to identify patterns and relationships in your data.

- Positive Correlation (High Values): Variables with strong positive correlations typically appear in warm, saturated colors (e.g., red), although the exact colors depend on the color scale you choose.

- Negative Correlation (Low Values): Variables with strong negative correlations appear at the opposite end of the color scale (e.g., blue).

- No Correlation (Values Close to 0): Variables with low or no correlation appear as a neutral color (e.g., white or gray) in the heatmap.

Correlation heatmaps are especially useful when dealing with a large number of variables, helping you identify which pairs exhibit strong associations.

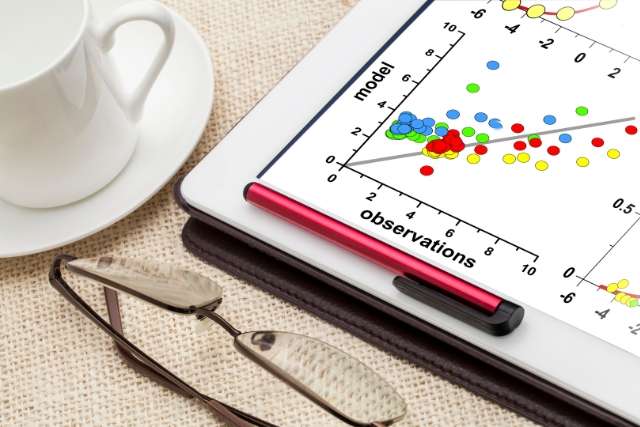

Scatterplots

Scatterplots are graphical representations of data points on a Cartesian plane, with one variable on the x-axis and another on the y-axis. They are valuable for visualizing the relationship between two continuous variables.

- Positive Correlation: In a positive correlation, data points on the scatterplot tend to form an upward-sloping pattern, suggesting that as one variable increases, the other tends to increase.

- Negative Correlation: A negative correlation is represented by a downward-sloping pattern, indicating that as one variable increases, the other tends to decrease.

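- No Correlation: When there is no correlation, data points show no upward or downward trend. Note that a curved pattern, such as a U-shape, can produce a correlation coefficient near zero even though the variables are strongly related, which is why it is worth plotting the data before relying on r.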

Scatterplots provide a clear and intuitive way to assess the direction and strength of the correlation between two variables.
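The following synthetic example (assuming NumPy) shows why a coefficient near zero should be checked against a scatterplot: y is completely determined by x, yet Pearson's r is zero because the relationship is U-shaped rather than linear.

```python
import numpy as np

# Synthetic data: y is a deterministic function of x, but the relationship is U-shaped
x = np.arange(-5, 6)   # -5, -4, ..., 5, symmetric around zero
y = x ** 2

r = np.corrcoef(x, y)[0, 1]
print(f"Pearson r = {r:.3f}")
```

A scatterplot of these points would show the parabola immediately, while the coefficient alone suggests there is nothing to see.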

Statistical Significance

It's crucial to determine whether the observed correlation is statistically significant. Statistical significance helps you assess whether the correlation is likely due to random chance or if it reflects a true relationship between the variables.

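Common methods for assessing statistical significance include hypothesis testing and p-values. For Pearson's r, the usual test of the null hypothesis that the true correlation is zero uses the statistic t = r√(n - 2) / √(1 - r²), which follows a t-distribution with n - 2 degrees of freedom when the data are bivariate normal. A p-value below the chosen significance level (commonly 0.05) means that a correlation at least this strong would be unlikely if the true correlation were zero, so the result is called statistically significant. Significance says nothing about strength, however: with a large sample, even a very weak correlation can be significant.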
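As a minimal sketch of that t-test, the code below computes the statistic and the two-sided p-value by hand and compares them with `scipy.stats.pearsonr()`, whose p-value should agree up to rounding. The ten paired measurements are made up for illustration, and the test assumes approximately bivariate normal data.

```python
import math
from scipy import stats

# Made-up paired measurements for ten subjects
x = [2.1, 3.4, 1.9, 4.8, 5.0, 3.3, 4.1, 2.7, 5.6, 3.9]
y = [1.8, 2.9, 2.4, 4.1, 4.6, 2.8, 3.2, 2.6, 5.1, 3.0]
n = len(x)

r, p_scipy = stats.pearsonr(x, y)

# t statistic for H0: true correlation = 0; t-distributed with n - 2 df under bivariate normality
t_stat = r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)
p_manual = 2 * stats.t.sf(abs(t_stat), df=n - 2)   # two-sided p-value

print(f"r = {r:.3f}, t = {t_stat:.2f}")
print(f"p (manual) = {p_manual:.6f}, p (SciPy) = {p_scipy:.6f}")
```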

Understanding statistical significance helps you confidently draw conclusions from your correlation analysis and make informed decisions based on your findings.

Common Mistakes in Correlation Analysis

While correlation analysis is a powerful tool for uncovering relationships in data, it's essential to be aware of common mistakes and pitfalls that can lead to incorrect conclusions. Here are some of the most prevalent issues to watch out for:

Causation vs. Correlation

Mistake: Assuming that correlation implies causation is a common error in data analysis. Correlation only indicates that two variables are associated or vary together; it does not establish a cause-and-effect relationship.

Example: Suppose you find a strong positive correlation between ice cream sales and the number of drowning incidents during the summer months. Concluding that eating ice cream causes drowning would be a mistake. The common factor here is hot weather, which drives both ice cream consumption and swimming, leading to an apparent correlation.

Solution: Always exercise caution when interpreting correlation. To establish causation, you need additional evidence from controlled experiments or a thorough understanding of the underlying mechanisms.

Confounding Variables

Mistake: Ignoring or failing to account for confounding variables can lead to misleading correlation results. Confounding variables are external factors that influence both of the variables being studied, which can create an apparent association between them, or distort a real one, even when neither variable affects the other.

Example: Suppose you are analyzing the relationship between the number of sunscreen applications and the incidence of sunburn. You find a positive correlation, suggesting that more sunscreen leads to more sunburn. However, the confounding variable is the time spent in the sun, which increases both how often people apply sunscreen and their risk of sunburn.

Solution: Be vigilant about potential confounding variables and either control for them in your analysis or consider their influence on the observed correlation.
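One common way to control for a confounder is partial correlation. The sketch below simulates the sunscreen example under a deliberately simplified assumption: time in the sun (Z) drives both sunscreen use (X) and sunburn (Y), and sunscreen has no direct effect on sunburn. The variable names, sample size, and noise levels are hypothetical.

```python
import numpy as np

# Simulated data (simplifying assumption: Z drives both X and Y, and X has no effect on Y)
rng = np.random.default_rng(0)
n = 1000
sun_hours = rng.normal(size=n)                      # confounder Z
sunscreen = sun_hours + 0.5 * rng.normal(size=n)    # X depends only on Z plus noise
sunburn = sun_hours + 0.5 * rng.normal(size=n)      # Y depends only on Z plus noise

def partial_corr(x, y, z):
    """Correlation between x and y after removing the linear effect of z from both."""
    r = np.corrcoef([x, y, z])   # 3 x 3 correlation matrix, one row per variable
    r_xy, r_xz, r_yz = r[0, 1], r[0, 2], r[1, 2]
    return (r_xy - r_xz * r_yz) / np.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))

raw = np.corrcoef(sunscreen, sunburn)[0, 1]
partial = partial_corr(sunscreen, sunburn, sun_hours)
print(f"raw r: {raw:.2f}, partial r controlling for sun exposure: {partial:.2f}")
```

The raw correlation between sunscreen and sunburn is strong (about 0.8 by construction), but the partial correlation controlling for sun exposure is close to zero, exposing the association as a product of the confounder. This formula only removes linear effects of the control variable.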

Sample Size Issues

Mistake: Drawing strong conclusions from small sample sizes can be misleading. Small samples can result in less reliable correlation estimates and may not be representative of the population.

Example: If you have only ten data points and find a strong correlation, it's challenging to generalize that correlation to a larger population with confidence.

Solution: Whenever possible, aim for larger sample sizes to improve the robustness of your correlation analysis. Statistical tests can help determine whether the observed correlation is statistically significant, given the sample size. You can also leverage the Appinio sample size calculator to determine the necessary sample size.
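To make the small-sample problem concrete, this sketch computes an approximate 95% confidence interval for r using the Fisher z-transformation, a standard large-sample approximation that assumes roughly bivariate normal data. The helper `pearson_ci` is hypothetical and uses only Python's `math` module.

```python
import math

def pearson_ci(r, n, z_crit=1.96):
    """Approximate 95% CI for Pearson's r via the Fisher z-transformation (requires n > 3)."""
    z = math.atanh(r)              # Fisher transform: approximately normal
    se = 1 / math.sqrt(n - 3)      # standard error on the z scale
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)

for n in (10, 100):
    low, high = pearson_ci(0.8, n)
    print(f"r = 0.80, n = {n:>3}: 95% CI ({low:.2f}, {high:.2f})")
```

For the same observed r = 0.80, ten data points give an interval of roughly 0.34 to 0.95, while 100 data points narrow it to roughly 0.72 to 0.86.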

Applications of Correlation Analysis

Correlation analysis has a wide range of applications across various fields. Understanding the relationships between variables can provide valuable insights for decision-making and research. Here are some notable applications in different domains:

Business and Finance

- Stock Market Analysis: Correlation analysis can help investors and portfolio managers assess the relationships between different stocks and assets. Understanding correlations can aid in diversifying portfolios to manage risk.

- Marketing Effectiveness: Businesses use correlation analysis to determine the impact of marketing strategies on sales, customer engagement, and other key performance metrics.

- Risk Management: In financial institutions, correlation analysis is crucial for assessing the interdependence of assets and estimating risk exposure in portfolios.

Healthcare and Medicine

- Drug Efficacy: Researchers use correlation analysis to assess the correlation between drug dosage and patient response. It helps determine the appropriate drug dosage for specific conditions.

- Disease Research: Correlation analysis is used to identify potential risk factors and correlations between various health indicators and the occurrence of diseases.

- Clinical Trials: In clinical trials, correlation analysis is employed to evaluate the correlation between treatment interventions and patient outcomes.

Social Sciences

- Education: Educational researchers use correlation analysis to explore relationships between teaching methods, student performance, and various socioeconomic factors.

- Sociology: Correlation analysis is applied to study correlations between social variables, such as income, education, and crime rates.

- Psychology: Psychologists use correlation analysis to investigate relationships between variables like stress levels, behavior, and mental health outcomes.

These are just a few examples of how correlation analysis is applied across diverse fields. Its versatility makes it a valuable tool for uncovering associations and guiding decision-making in many areas of research and practice.

Correlation Analysis in Python

Python is a widely used programming language for data analysis and offers several libraries that facilitate correlation analysis. In this section, we'll explore how to perform correlation analysis using Python, including the use of libraries like NumPy, pandas, and SciPy. We'll also provide code examples to illustrate the process.

Using Libraries

NumPy

NumPy is a fundamental library for numerical computing in Python. It provides essential tools for working with arrays and performing mathematical operations, making it valuable for correlation analysis.

To calculate the Pearson correlation coefficient using NumPy, you can use the numpy.corrcoef() function:

```python
import numpy as np

# Create two arrays (variables)
variable1 = np.array([1, 2, 3, 4, 5])
variable2 = np.array([5, 4, 3, 2, 1])

# Calculate Pearson correlation coefficient
# (np.corrcoef returns a 2x2 correlation matrix; [0, 1] is the off-diagonal entry)
correlation_coefficient = np.corrcoef(variable1, variable2)[0, 1]

print(f"Pearson Correlation Coefficient: {correlation_coefficient}")
```

pandas

pandas is a powerful data manipulation library in Python. It provides a convenient DataFrame structure for handling and analyzing data.

To perform correlation analysis using pandas, you can use the pandas.DataFrame.corr() method:

```python
import pandas as pd

# Create a DataFrame with two columns
data = {'Variable1': [1, 2, 3, 4, 5],
        'Variable2': [5, 4, 3, 2, 1]}
df = pd.DataFrame(data)

# Calculate Pearson correlation coefficient
correlation_matrix = df.corr()
pearson_coefficient = correlation_matrix.loc['Variable1', 'Variable2']

print(f"Pearson Correlation Coefficient: {pearson_coefficient}")
```

Code Examples

Pearson Correlation Coefficient

The numpy.corrcoef() example in the NumPy section above computes Pearson's r for these same two variables.

Spearman Rank Correlation

```python
import scipy.stats

# Create two arrays (variables)
variable1 = [1, 2, 3, 4, 5]
variable2 = [5, 4, 3, 2, 1]

# Calculate Spearman rank correlation coefficient
spearman_coefficient, _ = scipy.stats.spearmanr(variable1, variable2)

print(f"Spearman Rank Correlation Coefficient: {spearman_coefficient}")
```

Kendall Tau Correlation

```python
import scipy.stats

# Create two arrays (variables)
variable1 = [1, 2, 3, 4, 5]
variable2 = [5, 4, 3, 2, 1]

# Calculate Kendall Tau correlation coefficient
kendall_coefficient, _ = scipy.stats.kendalltau(variable1, variable2)

print(f"Kendall Tau Correlation Coefficient: {kendall_coefficient}")
```

These code examples demonstrate how to calculate correlation coefficients using Python and its libraries. You can apply these techniques to your own datasets and analyses, depending on the type of correlation you want to measure.

Correlation Analysis in R

R is a powerful statistical programming language and environment that excels in data analysis and visualization. In this section, we'll explore how to perform correlation analysis in R, utilizing libraries like corrplot and psych. Additionally, we'll provide code examples to demonstrate the process.

Using Libraries

corrplot

corrplot is a popular R package for creating visually appealing correlation matrices and correlation plots. It provides various options for customizing the appearance of correlation matrices, making it an excellent choice for visualizing relationships between variables.

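To use corrplot, install the package once with install.packages("corrplot") and load it in each session with library(corrplot). You can then pass it a correlation matrix; for example, corrplot(cor(mtcars)) visualizes the correlations among the variables in R's built-in mtcars dataset.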

psych

The psych package in R provides a wide range of functions for psychometrics, including correlation analysis. It offers functions for calculating correlation matrices, performing factor analysis, and more.

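To use psych, install the package once with install.packages("psych") and load it with library(psych). Its corr.test() function, for example, returns a correlation matrix together with the corresponding p-values.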

Code Examples

Pearson Correlation Coefficient

```r
# Create two vectors (variables)
variable1 <- c(1, 2, 3, 4, 5)
variable2 <- c(5, 4, 3, 2, 1)

# Calculate Pearson correlation coefficient
pearson_coefficient <- cor(variable1, variable2, method = "pearson")

print(paste("Pearson Correlation Coefficient:", round(pearson_coefficient, 2)))
```

Spearman Rank Correlation

```r
# Create two vectors (variables)
variable1 <- c(1, 2, 3, 4, 5)
variable2 <- c(5, 4, 3, 2, 1)

# Calculate Spearman rank correlation coefficient
spearman_coefficient <- cor(variable1, variable2, method = "spearman")

print(paste("Spearman Rank Correlation Coefficient:", round(spearman_coefficient, 2)))
```

Kendall Tau Correlation

```r
# Create two vectors (variables)
variable1 <- c(1, 2, 3, 4, 5)
variable2 <- c(5, 4, 3, 2, 1)

# Calculate Kendall Tau correlation coefficient
kendall_coefficient <- cor(variable1, variable2, method = "kendall")

print(paste("Kendall Tau Correlation Coefficient:", round(kendall_coefficient, 2)))
```

These code examples illustrate how to calculate correlation coefficients using R, specifically focusing on the Pearson, Spearman Rank, and Kendall Tau correlation methods. You can apply these techniques to your own datasets and analyses in R, depending on your specific research or data analysis needs.

Correlation Analysis Examples

Now that we've covered the fundamentals of correlation analysis, let's explore practical examples that showcase how correlation analysis can be applied to real-world scenarios. These examples will help you understand the relevance and utility of correlation analysis in various domains.

Example 1: Finance and Investment

Scenario:

Suppose you are an investment analyst working for a hedge fund, and you want to evaluate the relationship between two stocks: Stock A and Stock B. Your goal is to determine whether there is a correlation between the daily returns of these stocks.

Steps:

- Data Collection: Gather historical daily price data for both Stock A and Stock B.

- Data Preparation: Calculate the daily returns for each stock, which can be done by taking the percentage change in the closing price from one day to the next.

- Correlation Analysis: Use correlation analysis to measure the correlation between the daily returns of Stock A and Stock B. You can calculate the Pearson correlation coefficient, which will indicate the strength and direction of the relationship.

- Interpretation: If the correlation coefficient is close to 1, it suggests a strong positive correlation, meaning that when Stock A goes up, Stock B tends to go up as well. If it's close to -1, it indicates a strong negative correlation, implying that when one stock rises, the other falls. A correlation coefficient close to 0 suggests little to no linear relationship.

- Portfolio Management: Based on the correlation analysis results, you can decide whether it makes sense to include both stocks in your portfolio. If they are highly positively correlated, adding both may not provide adequate diversification. Conversely, if they are negatively correlated, they may serve as a good hedge against each other.
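The data preparation and correlation steps can be sketched in pandas as follows. The prices are hypothetical, and `pct_change()` computes each day's percentage change from the previous close.

```python
import pandas as pd

# Hypothetical closing prices for two stocks over six trading days
prices = pd.DataFrame({
    "stock_a": [100.0, 102.0, 101.0, 103.0, 104.0, 102.0],
    "stock_b": [50.0, 51.0, 50.5, 51.8, 52.1, 51.0],
})

# Daily return = percentage change from the previous close; the first day has none, so drop it
returns = prices.pct_change().dropna()

# Pearson correlation of the daily returns (Series.corr uses Pearson by default)
r = returns["stock_a"].corr(returns["stock_b"])
print(f"Correlation of daily returns: {r:.2f}")
```

With real data you would use a much longer price history; a handful of days, as here, is far too few to support conclusions about diversification.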

Example 2: Healthcare and Medical Research

Scenario:

You are a researcher studying the relationship between patients' Body Mass Index (BMI) and their cholesterol levels. Your objective is to determine if there is a correlation between BMI and cholesterol levels among a sample of patients.

Steps:

- Data Collection: Collect data from a sample of patients, including their BMI and cholesterol levels.

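- Data Preparation: Ensure that the data is clean and there are no missing values. If you group BMI into categories (for example, the WHO bands underweight, normal, overweight, and obese), the resulting variable is ordinal, so a rank-based measure such as Spearman's rho is more appropriate than Pearson's r for that analysis.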

- Correlation Analysis: Perform correlation analysis to calculate the Pearson correlation coefficient between BMI and cholesterol levels. This will help you quantify the strength and direction of the relationship.

- Interpretation: If the Pearson correlation coefficient is positive and significant, it suggests that as BMI increases, cholesterol levels tend to increase. A negative coefficient would indicate the opposite. A correlation close to 0 implies little to no linear relationship.

- Clinical Implications: Use the correlation analysis results to inform clinical decisions. For example, if there is a strong positive correlation, healthcare professionals may consider monitoring cholesterol levels more closely in patients with higher BMI.
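A sketch of both variants in Python, using hypothetical patient values: Pearson's r on continuous BMI, and Spearman's rho on BMI grouped into ordinal categories with `pd.cut()` using the WHO adult cut-offs (18.5, 25, and 30). The column names are illustrative assumptions.

```python
import pandas as pd
from scipy import stats

# Hypothetical patient data (illustration only)
patients = pd.DataFrame({
    "bmi": [19.0, 22.5, 24.0, 26.1, 28.3, 31.2, 33.0, 35.4],
    "cholesterol": [170, 180, 178, 195, 200, 215, 210, 230],   # mg/dL
})

# Pearson on the continuous BMI values
r, p = stats.pearsonr(patients["bmi"], patients["cholesterol"])

# Ordinal BMI categories; right=False makes intervals [18.5, 25), [25, 30), ...
patients["bmi_cat"] = pd.cut(
    patients["bmi"],
    bins=[0, 18.5, 25, 30, float("inf")],
    labels=["underweight", "normal", "overweight", "obese"],
    right=False,
)

# Ordered category codes (0-3) act as ranks; Spearman handles the many ties
rho, p_rho = stats.spearmanr(patients["bmi_cat"].cat.codes, patients["cholesterol"])
print(f"Pearson r (continuous BMI): {r:.2f}, p = {p:.4f}")
print(f"Spearman rho (BMI category): {rho:.2f}, p = {p_rho:.4f}")
```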

Example 3: Education and Student Performance

Scenario:

As an educational researcher, you are interested in understanding the factors that influence student performance in a high school setting. You want to explore the correlation between variables such as student attendance, hours spent studying, and exam scores.

Steps:

- Data Collection: Collect data from a sample of high school students, including their attendance records, hours spent studying per week, and exam scores.

- Data Preparation: Ensure data quality, handle any missing values, and categorize variables if necessary.

- Correlation Analysis: Use correlation analysis to calculate correlation coefficients, such as the Pearson coefficient, between attendance, study hours, and exam scores. This will help identify which factors, if any, are correlated with student performance.

- Interpretation: Analyze the correlation coefficients to determine the strength and direction of the relationships. For instance, a positive correlation between attendance and exam scores would suggest that students with better attendance tend to perform better academically.

- Educational Interventions: Based on the correlation analysis findings, academic institutions can implement targeted interventions. For example, if there is a positive correlation between study hours and exam scores, educators may encourage students to allocate more time to studying, keeping in mind that the correlation alone does not prove that extra study time causes higher scores.
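With three or more variables, a correlation matrix gives every pairwise coefficient at once. This sketch uses pandas' `DataFrame.corr()` on hypothetical student records; the column names and values are illustrative assumptions.

```python
import pandas as pd

# Hypothetical records for eight students (illustration only)
students = pd.DataFrame({
    "attendance_pct": [95, 80, 88, 70, 90, 60, 85, 92],
    "study_hours": [10, 6, 8, 4, 12, 3, 7, 9],
    "exam_score": [88, 72, 80, 65, 93, 58, 75, 84],
})

# Pairwise correlation matrices: Pearson (linear) and Spearman (monotonic, rank-based)
pearson_matrix = students.corr(method="pearson")
spearman_matrix = students.corr(method="spearman")
print(pearson_matrix.round(2))

# Each factor's Spearman correlation with the outcome, strongest first
print(spearman_matrix["exam_score"].drop("exam_score").sort_values(ascending=False))
```

Comparing the Pearson and Spearman matrices is a cheap check: large differences between them hint at outliers or non-linear relationships worth plotting.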

These practical examples illustrate how correlation analysis can be applied to different fields, including finance, healthcare, and education. By understanding the relationships between variables, organizations and researchers can make informed decisions, optimize strategies, and improve outcomes in their respective domains.

Conclusion for Correlation Analysis

Correlation analysis is a powerful tool that allows us to understand the connections between different variables. By quantifying these relationships, we gain insights that help us make better decisions, manage risks, and improve outcomes in various fields like finance, healthcare, and education.

So, whether you're analyzing stock market trends, researching medical data, or studying student performance, correlation analysis equips you with the knowledge to uncover meaningful connections and make data-driven choices. Embrace the power of correlation analysis in your data journey, and you'll find that it's an essential compass for navigating the complex landscape of information and decision-making.

How to Conduct Correlation Analysis in Minutes?

In the world of data-driven decision-making, Appinio is your go-to partner for real-time consumer insights. We've redefined market research, making it exciting, intuitive, and seamlessly integrated into everyday choices. When it comes to correlation analysis, here's why you'll love Appinio:

- Lightning-Fast Insights: Say goodbye to waiting. With Appinio, you'll turn questions into insights in minutes, not days.

- No Research Degree Required: Our platform is so user-friendly that anyone can master it, no PhD in research needed.

- Global Reach, Local Expertise: Survey your ideal target group from 1200+ characteristics across 90+ countries. Our dedicated research consultants are with you every step of the way.
