What is Predictive Modeling? Definition, Types, Techniques

Appinio Research · 21.03.2024 · 28min read


Have you ever wondered how companies predict which products you're likely to buy, or how healthcare providers forecast the likelihood of certain diseases? Predictive modeling answers questions like these by analyzing patterns in historical data to make informed predictions about future outcomes. From identifying fraudulent transactions in finance to optimizing inventory management in retail, predictive modeling plays a vital role in various industries, helping businesses make smarter decisions and improve efficiency.

In this guide, we'll dive deep into the world of predictive modeling, exploring its fundamentals, techniques, real-world applications, and best practices. Whether you're a data enthusiast eager to learn new skills or a business professional seeking insights to drive strategic decisions, this guide will equip you with the knowledge and tools needed to harness the power of predictive modeling effectively.

What is Predictive Modeling?

Predictive modeling is a technique used in data science and machine learning to predict future outcomes based on historical data and existing patterns. It involves developing mathematical models that capture relationships between variables and use them to make predictions about unseen data points. Predictive models can be applied to various domains, including finance, healthcare, marketing, and more, to forecast trends, identify risks, and guide decision-making processes.

Importance of Predictive Modeling

- Enhanced Decision Making: Predictive modeling enables organizations to make data-driven decisions by providing insights into future trends and outcomes.

- Risk Management: By forecasting potential risks and opportunities, predictive modeling helps businesses mitigate risks and capitalize on opportunities effectively.

- Optimized Operations: Predictive models can streamline operations by forecasting demand, optimizing inventory levels, and guiding resource allocation.

- Improved Customer Engagement: By analyzing customer behavior and preferences, predictive modeling allows businesses to personalize marketing campaigns, improve customer satisfaction, and increase retention rates.

Applications in Various Industries

- Finance: Predictive modeling is used in finance for credit scoring, fraud detection, stock price forecasting, and risk management.

- Healthcare: In healthcare, predictive modeling is applied for disease diagnosis, patient risk stratification, treatment optimization, and healthcare resource allocation.

- Marketing: Predictive modeling helps marketers segment customers, personalize marketing campaigns, forecast sales, and optimize advertising spending.

- Retail: In retail, predictive modeling is used for demand forecasting, inventory optimization, customer churn prediction, and pricing optimization.

- Manufacturing: Predictive modeling is employed in manufacturing for predictive maintenance, quality control, supply chain optimization, and production scheduling.

- Telecommunications: Telecommunication companies use predictive modeling for customer churn prediction, network optimization, and resource allocation.

- Energy: Predictive modeling is used in the energy sector for energy demand forecasting, equipment maintenance, and energy consumption optimization.

By leveraging predictive modeling techniques, organizations can gain valuable insights, improve decision-making processes, and gain a competitive edge in today's data-driven world.

Fundamentals of Predictive Modeling

Predictive modeling is built upon a solid foundation of understanding data, preprocessing techniques, exploratory data analysis (EDA), and feature engineering. Let's delve into each of these fundamental aspects to equip you with the necessary knowledge to embark on your predictive modeling journey.

Understanding Data

Before you begin building predictive models, you must have a deep understanding of the data you're working with. This involves not only knowing what type of data you have but also where it comes from and its inherent characteristics.

- Data Types: Data can be broadly categorized as numerical, categorical, or textual. Understanding the nature of your data is essential for selecting appropriate modeling techniques.

- Data Quality: Assessing the quality of your data is crucial. This involves identifying and handling missing values, outliers, and inconsistencies to ensure the reliability of your models.

- Data Source: Knowing the source of your data and how it was collected provides context and helps in making informed decisions throughout the modeling process.

As you navigate through the complexities of understanding data and preparing it for predictive modeling, consider leveraging powerful tools like Appinio for seamless data collection. With Appinio, you can effortlessly gather real-time consumer insights, enabling you to enrich your datasets and enhance the accuracy of your predictive models.


Data Preprocessing Techniques

Data preprocessing is a vital step in predictive modeling aimed at preparing raw data for analysis and modeling. Several techniques are employed during this phase to clean and transform the data.

- Data Cleaning: This involves removing or correcting errors in the data, such as missing values, duplicates, or inaccuracies. Imputation methods can be used to fill in missing values based on statistical measures or domain knowledge.

- Data Transformation: Data often requires transformation to make it suitable for modeling. This includes encoding categorical variables into numerical representations, scaling numerical features to a common range, and handling skewness or non-normality through transformations like log or Box-Cox.

- Feature Engineering: Feature engineering is the process of creating new features or transforming existing ones to enhance the model's predictive power. This could involve creating interaction terms, deriving new features from existing ones, or encoding temporal information.
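The cleaning and transformation steps above can be chained so that they are learned from the training data once and then reapplied identically to new data. The following is a minimal sketch using scikit-learn's `Pipeline` and `ColumnTransformer`; the DataFrame and its columns (`income`, `age`, `region`) are hypothetical, and the choice of median imputation, a log transform, and standard scaling is illustrative rather than prescriptive.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import FunctionTransformer, OneHotEncoder, StandardScaler

# Hypothetical raw data with missing values and a right-skewed income column
df = pd.DataFrame({
    "income": [32000, 45000, np.nan, 120000, 51000],
    "age": [25, 38, 47, 52, 31],
    "region": ["north", "south", "south", "east", np.nan],
})

# Numeric columns: fill gaps with the median, reduce skew with log1p, then standardize
numeric = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("log", FunctionTransformer(np.log1p)),
    ("scale", StandardScaler()),
])

# Categorical column: fill gaps with the most frequent value, then one-hot encode
categorical = Pipeline([
    ("impute", SimpleImputer(strategy="most_frequent")),
    ("onehot", OneHotEncoder(handle_unknown="ignore")),
])

preprocess = ColumnTransformer([
    ("num", numeric, ["income", "age"]),
    ("cat", categorical, ["region"]),
])

X = preprocess.fit_transform(df)
print(X.shape)  # 2 numeric columns + 3 one-hot region columns
```

Because the fitted `preprocess` object stores the medians, scaling parameters, and category list, calling `preprocess.transform(new_df)` applies exactly the same preprocessing to unseen data.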

Exploratory Data Analysis (EDA)

Exploratory Data Analysis (EDA) is a crucial step that involves visually exploring and analyzing the data to uncover patterns, trends, and relationships. EDA not only helps in understanding the data better but also guides subsequent modeling decisions.

- Summary Statistics: Calculate descriptive statistics such as mean, median, variance, and quartiles to summarize the central tendency and dispersion of the data.

- Data Visualization: Visualizations such as histograms, box plots, scatter plots, and heatmaps provide insights into the distribution and relationships between variables.

- Correlation Analysis: Identify correlations between variables to understand how they are related. Correlation matrices or scatterplot matrices can be used to visualize pairwise relationships.
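A first EDA pass often needs only a few lines of pandas. This sketch uses a small hypothetical dataset; `describe()` reports the count, mean, standard deviation, quartiles (the 50% row is the median), and extremes, while variance and the correlation matrix are computed separately. Plotting libraries such as matplotlib or seaborn can then render the correlation matrix as a heatmap.

```python
import pandas as pd

# Hypothetical dataset: ad spend, sales, and product returns for six stores
df = pd.DataFrame({
    "ad_spend": [10, 20, 30, 40, 50, 60],
    "sales": [15, 25, 38, 41, 55, 66],
    "returns": [5, 4, 4, 3, 2, 1],
})

# Central tendency and dispersion per column (50% row = median)
print(df.describe())

# Variance is not included in describe(), so compute it explicitly
print(df.var())

# Pearson correlation matrix of pairwise linear relationships
corr = df.corr()
print(corr.round(2))
```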

Feature Engineering

Feature engineering is a critical aspect of predictive modeling, as the quality of features directly impacts the model's performance. Effective feature engineering involves creating new features or transforming existing ones to capture relevant information and patterns in the data.

- Feature Creation: Generating new features through techniques such as binning, polynomial features, or domain-specific transformations can enhance the predictive power of the model.

- Feature Selection: Selecting the most relevant features is essential for model simplicity and performance. Techniques such as univariate feature selection, recursive feature elimination, or model-based feature selection can be employed.

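- Dimensionality Reduction: High-dimensional data can pose challenges for predictive modeling. Techniques like Principal Component Analysis (PCA) can reduce the number of features while preserving most of the essential information. t-distributed Stochastic Neighbor Embedding (t-SNE) is mainly used to visualize high-dimensional data in two or three dimensions rather than to create model inputs, since it does not learn a mapping that can be applied to new data points.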
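Two of the feature selection techniques named above, univariate selection and recursive feature elimination, can be sketched with scikit-learn as follows. The data is synthetic (`make_classification` with 3 informative features out of 10), and keeping exactly 3 features is an assumption made for illustration; in practice the number to keep is usually tuned with cross-validation.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFE, SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression

# Synthetic data: 10 features, only 3 of which carry signal
X, y = make_classification(n_samples=300, n_features=10, n_informative=3,
                           n_redundant=0, random_state=0)

# Univariate selection: keep the 3 features with the highest ANOVA F-score
univariate = SelectKBest(score_func=f_classif, k=3).fit(X, y)
print("SelectKBest keeps:", univariate.get_support(indices=True))

# Recursive feature elimination: refit the model and drop the weakest feature until 3 remain
rfe = RFE(LogisticRegression(max_iter=1000), n_features_to_select=3).fit(X, y)
print("RFE keeps:", rfe.get_support(indices=True))
```

Univariate selection scores each feature in isolation and is cheap; RFE accounts for how features work together inside a model but requires repeated refitting.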

By mastering these fundamental concepts and techniques, you'll be well-equipped to tackle the challenges of predictive modeling and derive actionable insights from your data.

Model Selection and Evaluation

Selecting the proper predictive model and evaluating its performance are critical steps in the predictive modeling process. Let's explore the various aspects of model selection and evaluation to help you make informed decisions and ensure the effectiveness of your predictive models.

Types of Predictive Models

Predictive modeling encompasses a wide range of algorithms and techniques, each suited to different types of data and prediction tasks. Understanding the various types of predictive models can help you choose the most appropriate one for your specific application.

- Regression Models: Regression models are used when the target variable is continuous. They aim to predict a numerical value based on input features. Examples include linear regression, polynomial regression, and support vector regression.

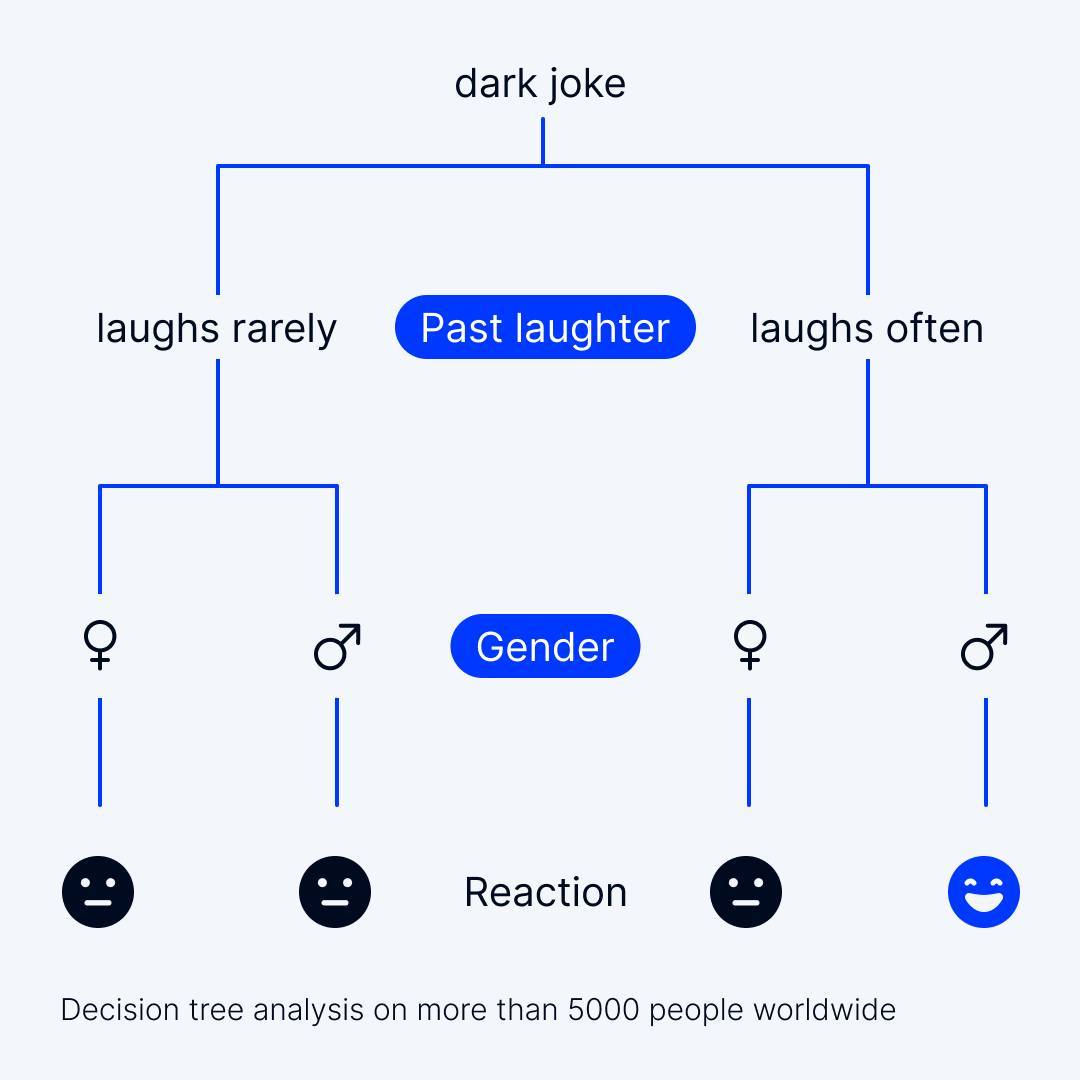

- Classification Models: Classification models are employed when the target variable is categorical, with two or more classes. These models assign class labels to input instances based on their features. Common classification algorithms include logistic regression, decision trees, random forests, and support vector machines.

- Clustering Models: Clustering models are unsupervised learning techniques used to group similar data points together based on their characteristics. K-means clustering and hierarchical clustering are popular clustering algorithms used in predictive modeling.

- Time Series Models: Time series models, such as ARIMA and exponential smoothing, are specialized algorithms designed to analyze and forecast time-dependent data. They are commonly used in finance, economics, and weather forecasting to predict future values based on historical patterns.
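Simple exponential smoothing is one of the most basic time series forecasting methods: each new level is a weighted average of the latest observation and the previous level, so older observations receive exponentially decreasing weight. The sketch below is written from scratch for illustration; the smoothing factor `alpha` and the weekly sales figures are hypothetical.

```python
def simple_exponential_smoothing(series, alpha):
    """Return the one-step-ahead forecast after processing `series`.

    Update rule: level_t = alpha * y_t + (1 - alpha) * level_{t-1},
    with the first level initialized to the first observation.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]
    for y in series[1:]:
        level = alpha * y + (1 - alpha) * level
    return level

# Hypothetical weekly unit sales
sales = [100, 110, 105, 120, 130]
print(simple_exponential_smoothing(sales, alpha=0.5))  # → 121.25
```

A larger `alpha` reacts faster to recent changes; `alpha=1` simply repeats the last observation. Production forecasting libraries also estimate `alpha` from the data and add trend and seasonal components.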

Criteria for Model Selection

When selecting a predictive model for your data and problem domain, various factors must be considered to ensure optimal performance and interpretability.

- Accuracy: The primary goal of predictive modeling is to build models that make accurate predictions on unseen data. Models with higher accuracy are preferred, but it's essential to avoid overfitting the training data.

- Interpretability: Depending on the application, the interpretability of the model may be crucial. Simple models like linear regression are easy to interpret but may sacrifice predictive performance compared to more complex models like neural networks.

- Scalability: Consider the model's ability to handle large datasets and real-time prediction tasks. Some algorithms, such as kernel support vector machines, whose training time grows roughly quadratically to cubically with the number of samples, may struggle to scale compared to simpler models like decision trees.

- Domain Knowledge: Leveraging domain knowledge can inform model selection and feature engineering. Understanding the underlying processes and relationships in the data can guide the choice of appropriate algorithms and features.

Cross-Validation Techniques

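Cross-validation is a robust method for estimating the performance of predictive models and selecting hyperparameters without setting aside a single fixed validation set, because every observation is used for both training and validation across different splits. A separate held-out test set is still advisable for the final performance estimate. Several cross-validation techniques are commonly used in practice.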

- K-Fold Cross-Validation: In K-fold cross-validation, the dataset is divided into K subsets or folds. The model is trained on K-1 folds and evaluated on the remaining fold, repeating this process K times. The performance metrics are then averaged across the K iterations.

- Leave-One-Out Cross-Validation (LOOCV): LOOCV is a special case of K-fold cross-validation where K equals the number of instances in the dataset. For each iteration, one data point is left out as the validation set, and the model is trained on the remaining data points.

- Stratified Cross-Validation: Stratified cross-validation ensures that each fold contains a proportional representation of the different classes in the dataset, making it particularly useful for imbalanced classification tasks.
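All three schemes are available as splitters in scikit-learn and can be passed to `cross_val_score`. A minimal sketch, assuming synthetic imbalanced data (roughly 90% / 10%) and logistic regression as an arbitrary example model:

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, LeaveOneOut, StratifiedKFold, cross_val_score

# Synthetic, imbalanced binary data
X, y = make_classification(n_samples=200, n_features=5, weights=[0.9, 0.1], random_state=0)
model = LogisticRegression(max_iter=1000)

# K-fold: 5 shuffled folds; each sample is used for validation exactly once
kfold_scores = cross_val_score(model, X, y, cv=KFold(n_splits=5, shuffle=True, random_state=0))

# Stratified K-fold: each fold keeps roughly the same class ratio as the full dataset
strat_scores = cross_val_score(model, X, y, cv=StratifiedKFold(n_splits=5, shuffle=True, random_state=0))

# LOOCV: one fit per sample (200 here), each validated on a single held-out point
loo_scores = cross_val_score(model, X, y, cv=LeaveOneOut())

print(kfold_scores.mean(), strat_scores.mean(), loo_scores.mean())
```

LOOCV requires as many model fits as there are samples, so it is usually reserved for small datasets.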

Performance Metrics

Evaluating the performance of predictive models requires the use of appropriate metrics that quantify the model's predictive accuracy and effectiveness.

- Accuracy: Accuracy measures the proportion of correctly predicted instances out of the total instances. While accuracy is a standard metric, it may not be suitable for imbalanced datasets, where one class is significantly more prevalent than the others.

- Precision and Recall: Precision measures the proportion of true positive predictions among all positive predictions, while recall measures the proportion of true positive predictions among all actual positive instances. Precision and recall are particularly useful for evaluating classification models, especially in scenarios where false positives or false negatives have different implications.

- F1 Score: The F1 score is the harmonic mean of precision and recall and provides a balance between the two metrics. It is particularly useful for imbalanced datasets where accuracy alone may be misleading.
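These definitions reduce to counts of true positives (TP), false positives (FP), and false negatives (FN): precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 = 2 · precision · recall / (precision + recall). The from-scratch sketch below uses hypothetical labels to show why accuracy alone can mislead on imbalanced data: a model that never predicts the rare class still scores 80% accuracy.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Return (accuracy, precision, recall, F1) for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    correct = sum(t == p for t, p in pairs)

    accuracy = correct / len(pairs)
    # Define each ratio as 0.0 when its denominator is zero
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1

# Imbalanced toy labels: 2 positives among 10 instances
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
always_negative = [0] * 10
print(classification_metrics(y_true, always_negative))  # → (0.8, 0.0, 0.0, 0.0)
```

scikit-learn provides the same metrics as `accuracy_score`, `precision_score`, `recall_score`, and `f1_score` in `sklearn.metrics`.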

By understanding the different types of predictive models, criteria for model selection, cross-validation techniques, and performance metrics, you can effectively evaluate and choose the most suitable models for your predictive modeling tasks.

How to Build a Predictive Model?

Building predictive models involves selecting appropriate algorithms and techniques to analyze data and make predictions. Let's explore the various approaches to building predictive models, including supervised learning algorithms, unsupervised learning algorithms, and ensemble methods.

Supervised Learning Algorithms

Supervised learning algorithms are trained on labeled data, where the target variable is known, and the goal is to learn a mapping from input features to the target variable. These algorithms are widely used for regression and classification tasks.

Regression Models

Regression models are used when the target variable is continuous and numerical. These models aim to predict a numerical value based on input features. Popular regression algorithms include:

- Linear Regression: Linear regression models the relationship between the input features and the target variable using a linear equation. It is simple, interpretable, and often serves as a baseline model.

- Decision Trees: Decision trees partition the feature space into hierarchical decision rules based on feature values. They are intuitive and easy to interpret but may suffer from overfitting.

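- Support Vector Regression (SVR): SVR is a variant of support vector machines (SVM) used for regression tasks. It fits a function that is as flat as possible while keeping most training points within an ε-wide margin (the "tube") around its predictions; errors smaller than ε are ignored, and larger errors are penalized.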
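The three regression models above share the same `fit`/`predict` interface in scikit-learn, which makes side-by-side comparison straightforward. A minimal sketch on synthetic data generated from y = 3x + 5 plus noise; the hyperparameters (`max_depth=4`, `C=100`, `epsilon=0.5`) are illustrative assumptions, not tuned values.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split
from sklearn.svm import SVR
from sklearn.tree import DecisionTreeRegressor

# Synthetic data: y = 3x + 5 plus Gaussian noise (std 1)
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(200, 1))
y = 3 * X[:, 0] + 5 + rng.normal(0, 1, size=200)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

models = {
    "linear": LinearRegression(),
    "tree": DecisionTreeRegressor(max_depth=4, random_state=0),
    "svr": SVR(kernel="rbf", C=100, epsilon=0.5),  # errors under 0.5 fall inside the tube
}
errors = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    errors[name] = mean_absolute_error(y_test, model.predict(X_test))
    print(f"{name}: test MAE = {errors[name]:.2f}")

# The linear model's coefficients are directly interpretable
print("slope:", models["linear"].coef_[0], "intercept:", models["linear"].intercept_)
```

Because the data is truly linear here, linear regression should recover a slope close to 3; on real data with nonlinear structure, the tree or SVR may do better.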

Classification Models

Classification models are used when the target variable is categorical, with two or more classes. These models assign class labels to input instances based on their features. Common classification algorithms include:

- Logistic Regression: Logistic regression is most commonly used for binary classification tasks, where the target variable has two possible outcomes, and it extends to more classes through its multinomial form. It models the probability of belonging to a particular class using the logistic function.

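- Random Forest: Random forest is an ensemble learning method that combines multiple decision trees, each trained on a bootstrap sample of the data and considering a random subset of features at each split, to improve predictive performance. Compared with a single decision tree, it overfits less and is more robust to noise and outliers.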

- Gradient Boosting Machines (GBM): GBM sequentially builds a series of weak learners, typically shallow decision trees, each fitted to correct the errors of the ensemble built so far. It combines their contributions into a final prediction and often achieves high accuracy, particularly on tabular data.
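The sketch below trains the three classifiers above on the same synthetic dataset and reports held-out accuracy. The dataset, the 70/30 stratified split, and the hyperparameters are assumptions for illustration only; relative rankings on real data can differ.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=500, n_features=8, n_informative=4, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=42
)

classifiers = {
    "logistic": LogisticRegression(max_iter=1000),
    "random_forest": RandomForestClassifier(n_estimators=200, random_state=42),
    "gbm": GradientBoostingClassifier(random_state=42),
}
scores = {}
for name, clf in classifiers.items():
    clf.fit(X_train, y_train)
    scores[name] = clf.score(X_test, y_test)  # accuracy on the held-out split
    print(f"{name}: test accuracy = {scores[name]:.3f}")

# Logistic regression outputs class probabilities via the logistic function
print(classifiers["logistic"].predict_proba(X_test[:3]))
```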

Unsupervised Learning Algorithms

Unsupervised learning algorithms are trained on unlabeled data, with the goal of discovering hidden patterns or structures within the data. These algorithms are commonly used for clustering, dimensionality reduction, and anomaly detection.

Clustering Models

Clustering models partition the data into groups or clusters based on the similarity of instances. Each cluster contains data points that are more similar to each other than to those in other clusters. Common clustering algorithms include:

- K-Means Clustering: K-means is a partitioning algorithm that divides the data into K clusters by minimizing the within-cluster variance. It is efficient and easy to implement but requires specifying the number of clusters in advance.

- Hierarchical Clustering: Hierarchical clustering builds a tree-like hierarchy of clusters by recursively merging or splitting clusters based on their proximity. It does not require specifying the number of clusters beforehand, and the resulting tree (a dendrogram) helps visualize cluster relationships.
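Both algorithms are available in scikit-learn. This minimal sketch assumes synthetic data with three blobs; for a side-by-side comparison it cuts the agglomerative (bottom-up hierarchical) tree at 3 clusters, although `AgglomerativeClustering` can instead cut the tree by distance via `distance_threshold` with `n_clusters=None`.

```python
import numpy as np
from sklearn.cluster import AgglomerativeClustering, KMeans
from sklearn.datasets import make_blobs

# Synthetic data: 300 points around three centers (true labels are discarded)
X, _ = make_blobs(n_samples=300, centers=3, cluster_std=0.8, random_state=0)

# K-means: the number of clusters must be chosen up front;
# n_init=10 restarts from different centroids and keeps the best run
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0).fit(X)
print("within-cluster sum of squares:", round(kmeans.inertia_, 1))

# Agglomerative clustering with Ward linkage merges the pair of clusters
# that least increases within-cluster variance at each step
hier = AgglomerativeClustering(n_clusters=3, linkage="ward").fit(X)

# Cluster sizes under each method
print(np.bincount(kmeans.labels_), np.bincount(hier.labels_))
```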

Dimensionality Reduction

Dimensionality reduction techniques aim to reduce the number of features in the dataset while preserving essential information. This can help reduce computational complexity, remove noise, and visualize high-dimensional data. Common dimensionality reduction algorithms include:

- Principal Component Analysis (PCA): PCA is a linear dimensionality reduction technique that identifies the principal components of the data, which capture the directions of maximum variance. It projects the data onto a lower-dimensional subspace while retaining most of the variability.

- t-Distributed Stochastic Neighbor Embedding (t-SNE): t-SNE is a nonlinear dimensionality reduction technique that emphasizes preserving the local structure of the data. It is commonly used for visualizing high-dimensional data in low-dimensional space, particularly in clustering analysis.
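A minimal sketch of both techniques using scikit-learn's bundled handwritten digits dataset (1,797 images of 8x8 pixels, so 64 features; no download required). Keeping enough components to explain 90% of the variance and embedding only the first 300 samples with t-SNE (to keep runtime short) are illustrative choices.

```python
from sklearn.datasets import load_digits
from sklearn.decomposition import PCA
from sklearn.manifold import TSNE
from sklearn.preprocessing import StandardScaler

X, y = load_digits(return_X_y=True)
X_scaled = StandardScaler().fit_transform(X)

# PCA: a float n_components keeps just enough components to explain 90% of the variance
pca = PCA(n_components=0.90).fit(X_scaled)
X_pca = pca.transform(X_scaled)  # the same projection can be applied to new data
print("PCA components kept:", pca.n_components_, "of", X.shape[1])

# t-SNE: a 2-D embedding for plotting; it offers fit_transform but no transform for new data
X_tsne = TSNE(n_components=2, perplexity=30, random_state=0).fit_transform(X_scaled[:300])
print("t-SNE embedding shape:", X_tsne.shape)
```

Coloring a scatter plot of `X_tsne` by the digit labels `y[:300]` is a common way to check visually whether classes form separate groups.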

Ensemble Methods

Ensemble methods combine multiple base models to improve predictive performance and robustness. By aggregating the predictions of diverse models, ensemble methods can achieve higher accuracy than individual models.

- Bagging (Bootstrap Aggregating): Bagging trains multiple instances of the same base model on different bootstrap samples of the training data and aggregates their predictions by averaging (for regression) or voting (for classification).

- Boosting: Boosting sequentially builds a series of weak learners, with each learner focusing on the mistakes made by its predecessors. Gradient boosting and AdaBoost are popular boosting algorithms used in predictive modeling.

- Stacking: Stacking combines the predictions of multiple base models using a meta-learner, often a simple linear model. It leverages the complementary strengths of different models to improve predictive performance further.
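All three ensemble strategies have ready-made implementations in scikit-learn. The sketch below compares them with 5-fold cross-validation on synthetic data; the base models (the default decision trees for bagging, the default decision stumps for AdaBoost, and a tree plus k-nearest neighbors for stacking) and the ensemble sizes are assumptions chosen for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier, BaggingClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=400, n_features=10, n_informative=5, random_state=1)

ensembles = {
    # Bagging: 50 decision trees (the default base model), each fit on a
    # bootstrap sample, combined by voting
    "bagging": BaggingClassifier(n_estimators=50, random_state=1),
    # Boosting: 100 sequential decision stumps (the default), each reweighting
    # the samples its predecessors misclassified
    "adaboost": AdaBoostClassifier(n_estimators=100, random_state=1),
    # Stacking: a logistic-regression meta-learner combines the base models'
    # out-of-fold predictions
    "stacking": StackingClassifier(
        estimators=[
            ("tree", DecisionTreeClassifier(max_depth=5, random_state=1)),
            ("knn", KNeighborsClassifier(n_neighbors=7)),
        ],
        final_estimator=LogisticRegression(max_iter=1000),
    ),
}
results = {}
for name, model in ensembles.items():
    results[name] = cross_val_score(model, X, y, cv=5).mean()
    print(f"{name}: mean CV accuracy = {results[name]:.3f}")
```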

By leveraging supervised and unsupervised learning algorithms, as well as ensemble methods, you can build powerful predictive models capable of making accurate predictions and uncovering valuable insights from your data.

Predictive Modeling Examples

To better understand predictive modeling's practical applications, let's explore some real-world examples of how it has been successfully employed to solve complex problems and drive decision-making processes.

Customer Churn Prediction in Telecom

Telecommunication companies use predictive modeling to identify customers who are likely to churn or cancel their subscriptions. By analyzing customer behavior, usage patterns, and demographics, telecom companies can build predictive models to forecast churn risk and implement targeted retention strategies, such as personalized offers or proactive customer service interventions, to reduce churn rates and improve customer satisfaction.

Fraud Detection in Finance

Banks and financial institutions leverage predictive modeling to detect fraudulent transactions and activities. By analyzing historical transaction data, user behavior, and other relevant features, predictive models can identify anomalous patterns indicative of fraudulent behavior, such as unauthorized access, identity theft, or unusual spending patterns. Fraud detection models help financial institutions minimize losses, protect customers' assets, and maintain trust in their services.

Healthcare Risk Prediction

Predictive modeling plays a crucial role in healthcare for risk prediction and disease diagnosis. For example, predictive models can analyze electronic health records (EHRs), medical imaging data, and genetic information to assess patients' risk of developing certain diseases or conditions, such as diabetes, cardiovascular diseases, or cancer. These models enable early intervention, personalized treatment plans, and better patient outcomes by identifying at-risk individuals and prioritizing preventive measures.

Demand Forecasting in Retail

Retailers use predictive modeling to forecast future demand for products and optimize inventory management and supply chain operations. By analyzing historical sales data, seasonality patterns, promotional activities, and external factors like weather or economic conditions, predictive models can estimate future sales volumes. Demand forecasting models help retailers minimize stockouts, reduce excess inventory, and improve overall operational efficiency and profitability.

These examples illustrate the diverse applications of predictive modeling across various industries, demonstrating its potential to drive actionable insights, improve decision-making, and enhance business outcomes.

Model Interpretation and Deployment

Once you've built predictive models, the next steps involve interpreting their results effectively and deploying them in real-world applications. Let's explore how to interpret model results, strategies for deploying models, and the importance of monitoring and maintenance.

Interpreting Model Results

Interpreting model results is essential for understanding how your model makes predictions and communicating its insights effectively. Several techniques can help you analyze the results of your predictive models.

- Feature Importance: Understanding which features have the most significant impact on predictions can provide valuable insights into the underlying relationships in the data. Techniques such as permutation importance, SHAP values, or coefficients in linear models can help quantify feature importance.

- Partial Dependence Plots: Partial dependence plots visualize the relationship between a feature and the predicted outcome while marginalizing over the values of other features. They can help identify how changes in a particular feature affect the model's predictions.

- Model Explanation Techniques: Model-agnostic techniques such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) explain individual predictions, and SHAP values can also be aggregated into global feature importance. They help clarify the decision-making process of complex models like neural networks.

Model Deployment Strategies

Deploying predictive models in production environments requires careful planning and consideration of various factors, including scalability, reliability, and integration with existing systems.

- Scalability: Ensure that your deployed model can handle high volumes of incoming data and requests efficiently. This may involve deploying the model on scalable cloud infrastructure, running multiple model replicas behind a load balancer, or, for very large models, splitting the model across devices (model parallelism).

- Real-time vs. Batch Processing: Decide whether your model needs to make real-time predictions or if batch processing is sufficient. Real-time applications require low latency and high throughput, while batch processing allows for offline analysis of large datasets.

- API Integration: Expose your model as an API (Application Programming Interface) to allow other systems and applications to interact with it. RESTful APIs are commonly used for this purpose and provide a standardized way for clients to communicate with the model.

- Model Versioning: Implement a system for versioning your models to track changes and ensure reproducibility. This allows you to roll back to previous versions if needed and maintain consistency across different environments.
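Dedicated tools such as MLflow's model registry handle versioning at scale, but the core idea can be sketched with plain files. The helpers `save_versioned` and `load_version` and the `model-v<version>.joblib` naming layout below are hypothetical; the sketch serializes a fitted scikit-learn model with `joblib`, records a SHA-256 checksum in a metadata file, and reloads a specific version, as one would for a rollback.

```python
import hashlib
import json
import tempfile
from pathlib import Path

import joblib
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

X, y = make_classification(n_samples=200, n_features=4, random_state=0)
model = LogisticRegression(max_iter=1000).fit(X, y)

def save_versioned(model, registry_dir, version):
    """Persist a model under a version tag plus a metadata file (hypothetical layout)."""
    path = Path(registry_dir) / f"model-v{version}.joblib"
    joblib.dump(model, path)
    digest = hashlib.sha256(path.read_bytes()).hexdigest()
    meta = {"version": version, "file": path.name, "sha256": digest}
    (Path(registry_dir) / f"model-v{version}.json").write_text(json.dumps(meta))
    return path

def load_version(registry_dir, version):
    """Load a specific stored version, e.g. to roll back after a bad release."""
    return joblib.load(Path(registry_dir) / f"model-v{version}.joblib")

registry = tempfile.mkdtemp()  # stand-in for a shared model store
save_versioned(model, registry, "1.0.0")
restored = load_version(registry, "1.0.0")
print((restored.predict(X) == model.predict(X)).all())  # → True
```

Only load serialized models from trusted sources, since `joblib` files are pickles and can execute code when loaded.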

Monitoring and Maintenance

Once deployed, predictive models require ongoing monitoring and maintenance to ensure they continue to perform optimally and remain relevant over time.

- Performance Monitoring: Monitor the performance of your deployed model regularly to detect any degradation in accuracy or predictive power. Set up automated alerts for anomalies or deviations from expected behavior.

- Data Drift Detection: Data drift occurs when the distribution of incoming data changes over time, leading to a mismatch between the training and deployment data. Implement data drift detection mechanisms to identify and address these changes promptly.

- Model Retraining: Periodically retrain your model using fresh data to ensure it stays up-to-date and adapts to evolving patterns and trends in the data. Establish a retraining schedule based on the rate of data change and the criticality of the model.

- Feedback Loops: Incorporate feedback loops into your deployment pipeline to collect user feedback and ground truth labels for predictions. This feedback can be used to continuously improve the model and address any biases or errors.
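One simple data drift check is the Population Stability Index (PSI), which compares how a feature's values fall into bins in the training (reference) data versus live data. The from-scratch sketch below uses equal-frequency bins derived from the reference sample; the simulated samples are hypothetical, and the often-quoted thresholds (below about 0.1 stable, above about 0.25 a significant shift) are industry rules of thumb rather than standards. Statistical tests such as the two-sample Kolmogorov-Smirnov test are a common alternative.

```python
import numpy as np

def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample (e.g. training data) and a live sample."""
    # Interior edges at the reference sample's deciles give equal-frequency bins
    inner_edges = np.quantile(expected, np.linspace(0, 1, bins + 1)[1:-1])
    # np.digitize maps each value to a bin index 0..bins-1; values beyond the
    # training range fall into the first or last bin
    exp_counts = np.bincount(np.digitize(expected, inner_edges), minlength=bins)
    act_counts = np.bincount(np.digitize(actual, inner_edges), minlength=bins)
    eps = 1e-6  # avoids log(0) and division by zero for empty bins
    exp_frac = np.clip(exp_counts / len(expected), eps, None)
    act_frac = np.clip(act_counts / len(actual), eps, None)
    return float(np.sum((act_frac - exp_frac) * np.log(act_frac / exp_frac)))

rng = np.random.default_rng(0)
train_feature = rng.normal(0, 1, 10_000)
live_same = rng.normal(0, 1, 10_000)       # same distribution as training
live_shifted = rng.normal(0.5, 1, 10_000)  # the mean has drifted by 0.5

psi_same = population_stability_index(train_feature, live_same)
psi_shift = population_stability_index(train_feature, live_shifted)
print(round(psi_same, 3), round(psi_shift, 3))
```

In a monitoring job, a check like this would run per feature on each new batch, with an alert or retraining trigger when the index crosses the chosen threshold.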

By effectively interpreting model results, deploying models using robust strategies, and implementing monitoring and maintenance practices, you can ensure the successful deployment and long-term effectiveness of your predictive models in real-world applications.

Predictive Modeling Tools and Libraries

Choosing the right software and libraries is crucial for effectively implementing predictive modeling solutions. Numerous tools are available, each offering unique features and capabilities. Let's explore some of the popular software and libraries commonly used in predictive modeling.

Python Libraries

Python has emerged as a dominant language in the field of data science and machine learning, thanks to its extensive ecosystem of libraries and frameworks. Some of the most widely used Python libraries for predictive modeling include:

- scikit-learn: scikit-learn is a comprehensive machine learning library that provides simple and efficient data mining and analysis tools. It offers various algorithms for classification, regression, clustering, dimensionality reduction, and model selection.

- TensorFlow: Developed by Google, TensorFlow is an open-source machine learning framework widely used for building and training deep learning models. It provides a flexible architecture for implementing various neural network architectures and scales from individual devices to distributed systems.

- PyTorch: PyTorch is another popular deep learning framework known for its dynamic computational graph and ease of use. It offers a rich ecosystem of libraries and tools for building and training neural networks, with support for both research and production-level deployments.

- XGBoost and LightGBM: XGBoost and LightGBM are gradient boosting libraries known for their high performance and scalability. They are widely used for building ensemble models and achieving state-of-the-art results in predictive modeling competitions and real-world applications.

R Packages

R is a programming language and environment specifically designed for statistical computing and graphics. It offers a vast repository of packages for various statistical and predictive modeling tasks. Some popular R packages for predictive modeling include:

- caret: caret (Classification And REgression Training) is a comprehensive package for building and evaluating predictive models in R. It provides a unified interface for training and tuning a wide range of machine learning algorithms.

- randomForest: randomForest is an R package that implements the random forest algorithm for classification and regression tasks. It constructs an ensemble of decision trees and aggregates their predictions to improve accuracy and robustness.

- glmnet: glmnet is a package for fitting generalized linear models with lasso, ridge, or elastic-net regularization. It is particularly useful for high-dimensional data and feature selection tasks, including cases where the number of predictors exceeds the number of observations.

- keras: keras is an R interface to the Keras deep learning library, which provides a high-level API for building and training neural networks. It offers a user-friendly interface and integrates with the TensorFlow backend for developing deep learning models.

Other Tools and Platforms

In addition to Python and R libraries, several other tools and platforms offer capabilities for predictive modeling:

- Microsoft Azure Machine Learning: Azure Machine Learning is a cloud-based platform for building, training, and deploying machine learning models at scale. It provides a range of tools and services for data preparation, model development, and operationalization.

- Amazon SageMaker: Amazon SageMaker is a fully managed service that enables developers and data scientists to build, train, and deploy machine learning models quickly and easily. It offers built-in algorithms, pre-built notebooks, and scalable infrastructure for end-to-end ML workflows.

- Google AI Platform: Google AI Platform (since superseded by Vertex AI) is a cloud-based platform that provides tools and services for building and deploying machine learning models on Google Cloud. It offers managed services for training and serving models, as well as integration with TensorFlow and other popular ML frameworks.

Choosing the right software and libraries depends on factors such as programming language preference, project requirements, and scalability considerations.

Conclusion for Predictive Modeling

Predictive modeling is a powerful tool that allows businesses to unlock valuable insights from data and make informed decisions about the future. By leveraging statistical algorithms and machine learning techniques, predictive models can forecast trends, identify risks, and optimize processes across various industries. From predicting customer behavior to detecting fraudulent activities, the applications of predictive modeling are diverse and far-reaching. By mastering the fundamentals of data analysis, model selection, and interpretation, you can harness the potential of predictive modeling to drive innovation, improve efficiency, and stay ahead of the competition in today's data-driven world.

However, it's crucial to approach predictive modeling with caution and diligence, as even the most sophisticated models are not immune to errors or biases. Continuous monitoring, validation, and refinement of models are essential to ensure their accuracy and relevance over time. Additionally, effective communication of model results and limitations is crucial for building trust and facilitating informed decision-making within organizations. By adopting a systematic and collaborative approach to predictive modeling, businesses can realize its full potential and derive actionable insights that drive success in the dynamic and ever-evolving landscape of modern business.

How to Easily Collect Data for Predictive Modeling?

Experience the power of Appinio's real-time market research platform for predictive modeling. With Appinio, you can conduct your own market research in minutes, gaining valuable consumer insights to fuel your predictive models.

Here's why you should choose Appinio:

- From questions to insights in minutes: Get actionable data quickly to inform your predictive modeling decisions.

- Intuitive platform: No need for a PhD in research – our platform is designed for ease of use.

- Rapid response time: With an average field time of less than 23 minutes for 1,000 respondents, you can gather data efficiently.
